Dear readers,

A few months ago, two of the most prolific podcasters of all time reached out to me with a simple message: We have an incredible story, and we think your audience at Tangle should hear it.

The podcasters, Andy Mills and Matthew Boll, are sharp journalists with an eye for important investigations. Mills is the co-creator of The Daily at The New York Times, the most listened-to news podcast in the world, and he produced the successful limited series Rabbit Hole (on internet algorithms). Boll helped build the podcasting company Gimlet and worked on award-winning podcasts like Crimetown. Together, they created two of my favorite podcasts of the last few years, The Witch Trials of J.K. Rowling and the documentary-style podcast Reflector. So when these two told me they had a story I needed to hear in full to truly understand, I had to find out more.

The story is about a debate — the debate of all debates, according to some of our country’s smartest people — whose outcome could literally determine the future of the human race:

How serious is the threat from artificial intelligence?

It turns out the answer to this question is not nearly as straightforward as one might hope. The debate is now setting some of the smartest, wealthiest, and most influential people in the world against each other, with no clear traditional political dividing lines and even less clarity on whose argument might win the day.

Since March, Mills and Boll have been investigating this debate along with a team of journalists from their investigative reporting outlet, Longview, including former NPR correspondent and podcast host Gregory Warner. They decided to share their notes, rough cuts, and behind-the-scenes discussions exclusively with our team. It is precisely the kind of balanced, curious reporting we strive to do here at Tangle — peeling back the layers of the biggest stories of our time.

Today, we’re revealing that story to you, in written form, and in partnership with Longview. We also encourage you to go listen and subscribe to their podcast series, The Last Invention, which is the most riveting deep dive on artificial intelligence I’ve come across yet.

Best,

Isaac

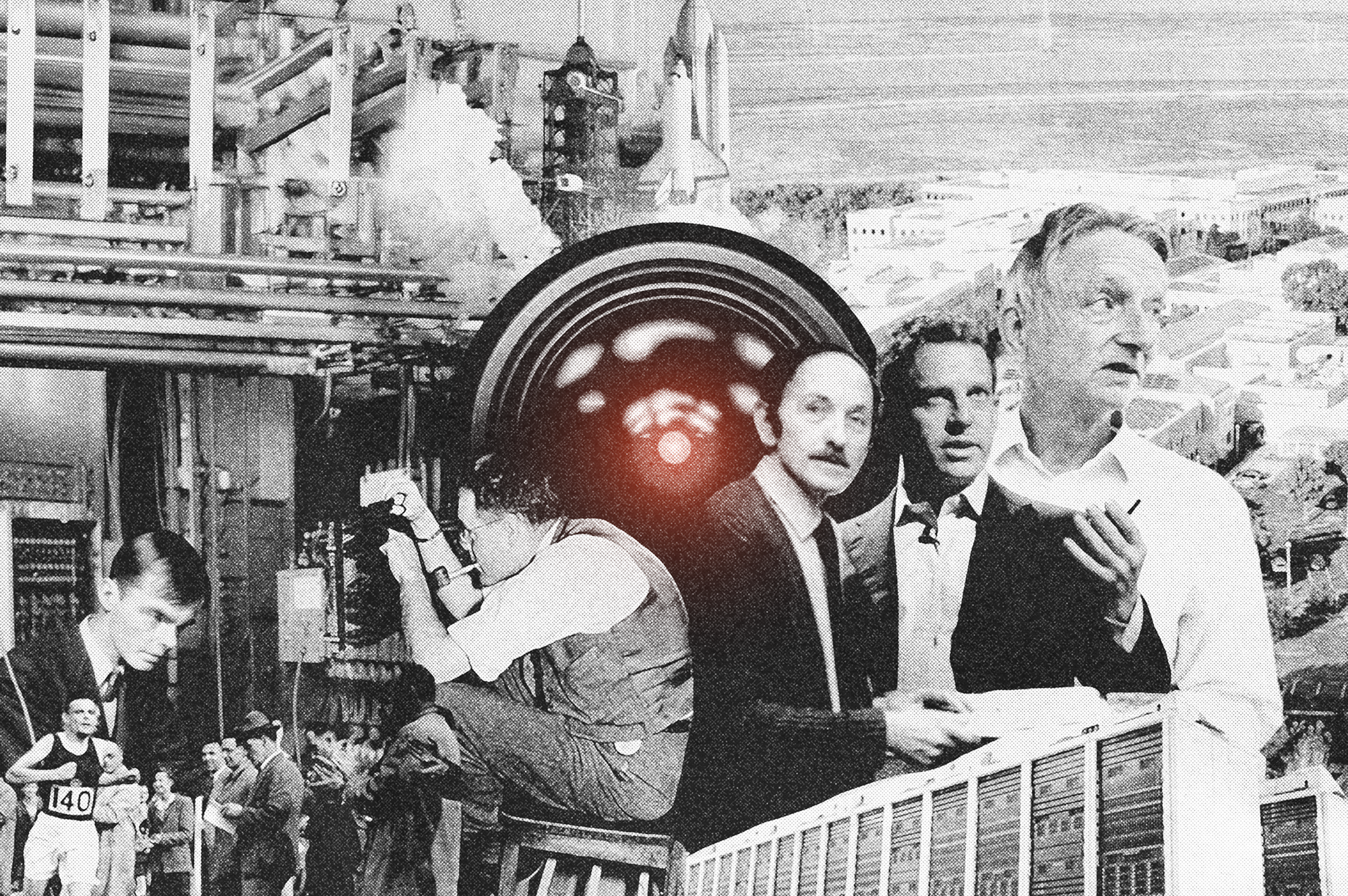

The history.

In 1940, in the midst of World War II, the Allied nations had a problem: They had to crack a code.

Germany had effectively cut off supply lines between the U.S. and Great Britain using its advanced submarines, known at the time as “U-boats.” The Allies knew they could change the course of the war if they could find a way to evade the U-boats, as they didn’t have the naval technology to attack them head-on. So they rested their hopes on a bold strategy: cracking the “Enigma code” that the Nazis used to communicate with the submarines.

At this time in history, codebreaking was a human endeavor that demanded days, weeks and sometimes even years, involving massive manual efforts to intercept and decode communications among rival militaries. But the Enigma code was far more complex than anything these codebreakers had ever seen, and the Allies didn’t have enough manpower to figure it out on the compressed timeline required to win the war. Thousands of soldiers were dying and hundreds of ships were being sunk as months passed with no success at cracking Enigma.

That was until a team of mathematicians, led by English logician Alan Turing, developed a giant electromechanical calculator at Bletchley Park, the secret codebreaking center in the United Kingdom. Turing, with the help of researchers across the world, used this giant calculator, called the Bombe, to sift through German Enigma messages, each encrypted with one of roughly 150 quintillion possible combinations of rotors, plugboard connections, and shifting letters.

Turing and his team of codebreakers used the Bombe to eliminate impossible combinations and ultimately crack the Enigma code. And, as you probably know, the United States was eventually able to cross the Atlantic to participate in the massive Allied amphibious assault on Europe on D-Day, putting troops on the ground in Nazi-occupied France and leading to the eventual defeat of the Nazis.

All the way back then, in 1940, Alan Turing was considering a day when this giant computer he had helped build would evolve to be so smart — and so advanced — that it could think for itself. By 1951, six years after the end of World War II, Turing predicted that machines would one day become capable of even more advanced thinking than humans, gain the ability to direct their own improvement, and then leave humanity behind. His view wasn’t simply that artificial intelligence would be the next great technology — the last invention, so to speak — but that it would actually create an entirely new species.

This view was the inspiration for the Turing Test, Turing’s famous theoretical method for distinguishing between human and artificial intelligence. The test involves a human, a computer and a judge, with the judge posing questions designed to differentiate computer responses from human ones. Many of Turing’s contemporaries were skeptical of the idea that computers could “think,” but Turing shifted the conversation. As he put it: “The question, ‘Can machines think?’ should be replaced with, ‘Can machines do what we (as thinking entities) can do?’”

At the time, Turing was relatively relaxed about all this. Yes, he believed that these thinking machines would one day become their own species and leave humanity in the dust. Yes, he believed these machines would be able to talk to each other, learn, improve, and exceed humans at nearly any task. But he cautioned that all of this was very, very far away, and he developed the Turing Test as the canary in the coal mine for the future, to warn us when it arrived.

Today, the machines Turing imagined are here. And they can pass some versions of Turing’s test. At least… that’s what ChatGPT told me.

The threat.

First, a few definitions and differentiations. The consumer tools most people think of as “artificial intelligence” are large language models (or LLMs) like ChatGPT, Claude, Gemini, or Grok, but they aren’t what worried technologists think of when they imagine the future of AI. Chatbots like these are, in some ways, just an easy way for tech companies to turn a profit on their technology, gather users, and change how people seek answers on the internet (something that used to be done through a Google search).

Of course, chatbots are no small part of this moment: The artificial intelligence industry is now propping up the entire stock market, with an AI frenzy pushing record after record on Wall Street. Goldman Sachs CEO David Solomon is warning about a potential AI bubble and inevitable stock drawdown, while policymakers in the White House are shrugging off the threat of a slashed labor force because they believe these technologies will make human labor less necessary.

Still, this is not the threat that keeps alarmists up at night. What does is the realization that these LLMs can learn, which shows a kind of malleable intelligence. The most concerned AI experts frame the moment more like this:

We know that people will start building relationships with these chatbots — using them for everything from therapy to math homework to administrative tasks. In fact, that’s already happening, and it can come with dire consequences. We know, too, that technologists like Elon Musk are already developing humanoid robots that will probably constitute the next generation of laborers. It isn’t hard to imagine a world where in, say, 10–20 years, humanoid machines are working 24/7 in factories with no breaks and no salary. The people at the cutting edge of this technology are certain that this future is around the corner.

The question that really keeps them up is, What don’t we know? Job losses and human-machine relationships are a given. But when you consider how the internet evolved in ways we never expected, concerns about job losses or teens talking to robots feel almost meaningless, especially when set against a future where humans live alongside an entity that will look at us the same way we look at ants.

This group, the one with a deep sense of fear and foreboding about the future, we’ll call The Doomers. They are one of three groups Mills, Boll, Warner and the Longview team identified in their reporting, alongside The Accelerationists and The Scouts.

What The Doomers say.

Though The Doomers sometimes disavow this label (many prefer the term “realists”), they believe that we need to stop the AI industry from going any further, and we need to stop it right now. This camp, which includes many current and former technologists in the AI space, believes our choice is pretty simple: If there is even a small probability that one day a superintelligent machine will view us the same way we view an insect, and we are actively working to invent that machine right now, we should pull the plug on the experiment.

Connor Leahy, a computer scientist and well-known activist in The Doomer camp, put it this way:

“I think it should be illegal. It should be logically illegal for people and private corporations to attempt, even, to build systems that could kill everybody.”

Along with Leahy, one of the most prominent members of The Doomer camp is Eliezer Yudkowsky, who co-founded the Machine Intelligence Research Institute and garnered praise for his recent book, If Anyone Builds It, Everyone Dies. Interestingly, a number of Doomers have joined the camp after leaving jobs where they were working directly on the advancement of artificial intelligence. Yudkowsky, for example, was initially funded by Peter Thiel to investigate how to develop AI, but after years of research concluded that there was no way to do so that wouldn’t lead to the destruction of humanity.

What The Accelerationists say.

The Accelerationists are a broad coalition that, generally speaking, believes AI will be a powerful force for good that solves some of our most pressing problems, from political polarization to climate change. Therefore, they say, we should excitedly accelerate AI technology as quickly as we can, and find ways to integrate it into daily life that improve the world for all of us.

Some in The Accelerationist camp think fears about AI have been overblown and attribute those fears largely to the “safetyist” culture that now permeates many areas of American life. To many Accelerationists, fears of AI taking over the world are just downstream of a cultural mindset that prioritizes physical, emotional, and psychological safety — the same culture that gave us “microaggressions” and “safe spaces.” Others acknowledge the risk of advanced AI, but want to accelerate progress because they think the upside is worth the risk or because they worry about what would happen if the wrong people advance AI while the “good guys” try to pump the brakes.

Interestingly, The Accelerationists constitute a politically diverse camp of adherents. The aforementioned Thiel, a famous libertarian and Trump supporter, is an Accelerationist who thinks AI can break an innovation stagnation that’s taken hold in the West. Reid Hoffman, one of the biggest Democratic donors in the country, is an Accelerationist who thinks AI will be a massive boon to the economy. Marc Andreessen, a longtime Democrat turned Trump supporter, believes technological breakthroughs like AI can lead to expansions of rights and wealth. Sam Altman, a former Trump critic and foe of Elon Musk who has generally backed Democratic causes, views the advancement of AI as fundamentally aligned with his values as a social progressive — ultimately thinking it will free people from work they don’t want to do and create abundant clean energy.

It’s a fascinating collection of thinkers, one that illustrates how AI has not yet become a left-right wedge issue.

What The Scouts say.

The Scouts, for lack of a better analogy, are vaguely akin to the centrists or moderates. It’s a term Mills came up with to distinguish them from The Doomers, and to draw a comparison to the Boy Scouts (for their motto: Be prepared). While many Accelerationists lump Scouts in with The Doomers, The Scouts do seem to be carving out their own lane.

The Scouts believe, in effect, that it’s probably impossible to stop advanced, superintelligent AI from getting built and also that the potential benefits from that AI are so great that it might even be wrong for us to stop it. What happens if we limit these technological advancements and end up depriving Americans of a way to work less? Or accrue more wealth? Or cure intractable diseases? These questions are top of mind for The Scouts.

They also worry. They focus on building a movement of people throughout society to get ready for what’s coming down the road; part of that is ensuring that the AI we make is safe, and that politicians stop fighting over the typical dramas of the day and turn their attention wholly to this matter — which they believe is a major, history-defining question.

Among The Scouts are the game theorist and poker player Liv Boeree and William MacAskill, a philosopher and originator of the effective altruism movement. Boeree and MacAskill want universities, research labs, and the media to help direct the future of AI — not just tech companies. They want more outside regulation and more eyes on what is happening behind closed doors at these tech companies.

Perhaps the best-known example is Geoffrey Hinton, who left Google to speak freely about the risks he saw ahead, including the possibility that “digital intelligence” would “take over from biological intelligence,” and who later won a Nobel Prize for his work in artificial intelligence. Yoshua Bengio, the most cited living scientist on earth and one of three “godfathers” of AI, also falls into this camp. After decades of dedicating his life to advancing AI, he is now doing everything he can to slow it down.

And funnily enough, The Scouts share one common view with both The Doomers and The Accelerationists: We can’t wait. We need to start preparing right now.

The Last Invention.

Debates like these are not new in American politics. What is new, in this case, are the stakes: If what The Doomers believe is true, then the very existence of humanity is on the line, and it’s critical we proceed with caution, and even abandon further innovation in artificial intelligence, to preserve our species.

If what The Accelerationists say is true, then unlocking the future of AI could bring about a more utopian, advanced society of prosperity. Failing to innovate in this space, especially if other countries (like China) beat us to it, could mean we end up technologically inferior on the world stage.

Or, perhaps, the truth is somewhere in the middle, and we should follow the advice of The Scouts and pick and choose our next advancements intentionally.

In their new podcast, The Last Invention, the team at Longview dives deep on this question — how we got here, what the future holds, and which arguments will shape it. We’re proud to be partnering with them to share this story with you, and we encourage you to subscribe to The Last Invention, hosted by Gregory Warner, and listen to Episodes 1, 2, and 3, which are now available anywhere you get your podcasts.
